I stumbled over the announcement of the Pipeline Artifacts tasks, which were supposed to be superior to the classic Build Artifacts tasks.

Azure Pipeline Artifacts are meant to be the next generation of Build Artifacts and to replace them.

From several short documents and blog posts I could gather the following information about Pipeline Artifacts:

- Pipeline Artifacts make use of the same technology as Universal Packages

- Artifacts can be created across multiple phases of a build

- The benefits show mostly with large build outputs

- Universal Packages make use of client- and server-side deduplication, so I expect the same for Pipeline Artifacts

- Uploads and downloads are sped up through parallelization

- Identical files are uploaded and downloaded only once across the whole organization
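To make the deduplication and parallelization points above more concrete, here is a minimal Python sketch of content-addressed uploading. It is not Azure's implementation, which the sources above do not describe in detail; it assumes whole files keyed by a SHA-256 hash, and `store` is a hypothetical stand-in for an organization-wide server store.

```python
from __future__ import annotations

import hashlib
from concurrent.futures import ThreadPoolExecutor


def content_id(data: bytes) -> str:
    """Identify content by its SHA-256 hash (an assumed key, not Azure's)."""
    return hashlib.sha256(data).hexdigest()


def upload_deduplicated(files: dict[str, bytes], store: dict[str, bytes],
                        max_workers: int = 8) -> list[str]:
    """Upload only content that `store` does not already hold.

    `files` maps a relative path to its bytes; `store` is a hypothetical
    stand-in for an organization-wide, content-addressed server store.
    Returns the paths whose content was actually uploaded.
    """
    pending: dict[str, str] = {}  # hash -> first path carrying that content
    for path, data in files.items():
        digest = content_id(data)
        # Server side: skip content the store already has.
        # Client side: skip duplicates within this upload.
        if digest not in store and digest not in pending:
            pending[digest] = path

    def upload(item: tuple[str, str]) -> str:
        digest, path = item
        store[digest] = files[path]  # placeholder for a real network upload
        return path

    # Transfer the remaining unique items in parallel; map keeps input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(upload, pending.items()))
```

Calling `upload_deduplicated({"a.txt": b"hello", "b.txt": b"hello"}, store)` uploads only `a.txt`, and a later call with another file containing `b"hello"` uploads nothing, because the hash is already in `store`.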

All this sounds promising, so I set out to test the new technology. I also wanted to make the results public, so I created a public project on Azure DevOps (which also gives me 10 free parallel pipelines to try this out).

Test Summary

This article describes tests comparing the new Pipeline Artifacts tasks with the classic Build Artifacts tasks:

- Two repositories, one with small files and one with large files, each around 1.5 to 2 GB in size

- Four pipelines, each combining either the large or the small files with either Build Artifacts or Pipeline Artifacts. The pipelines use the following task pairs:
  - Publish Build Artifacts / Download Build Artifacts
  - Publish Pipeline Artifact / Download Pipeline Artifact

- Each pipeline consists of seven phases; every phase runs the same tasks, but each on a different one of the following agent pools:
  - Hosted
  - Hosted macOS
  - Hosted Ubuntu 1604
  - Hosted VS2017
  - Hosted Windows 2019 with VS2019
  - Hosted Windows Container
  - Default (contains one private agent running on Windows 10)

- 100 builds triggered on each pipeline, so that every agent is tested in every pipeline

The results were interesting, although in at least some of the cases they matched what I had expected. They show the different times needed to publish and to download artifacts.

As you can see, the biggest performance boost comes with lots of small files in your artifacts.

Since I tested this for all available agent pools, I cross-checked whether one agent is faster than the others. The charts below show the average duration per agent for the upload and download tasks of Build and Pipeline Artifacts. What can easily be seen is that the macOS-based agent seems to handle the files fastest. Why that is, I can only speculate.

Generating Test Data

This project has two repositories: one with large files and one with small files. I used a basic script from Stephane van Gulick (PowerShell MVP) but changed it so that it creates files of random size. I can either choose the total size of all files created, or choose the minimum and maximum size of a single file together with how many files with a random size in that range should be created. The following shows the script for creating the files:
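The original PowerShell script is not reproduced in this text. As a rough, hypothetical Python equivalent of the min/max mode described above (the function name, file naming scheme and `seed` parameter are my own assumptions, and the total-size mode is left out), the idea could look like this:

```python
import os
import random
from pathlib import Path
from typing import List, Optional


def create_random_files(number_of_files: int, path: str, min_size: int,
                        max_size: int, seed: Optional[int] = None) -> List[Path]:
    """Create `number_of_files` files whose sizes in bytes are drawn
    uniformly from [min_size, max_size]; the content is random bytes."""
    if not 0 <= min_size <= max_size:
        raise ValueError("expected 0 <= min_size <= max_size")
    rng = random.Random(seed)  # seeds only the sizes, not the content
    target = Path(path)
    target.mkdir(parents=True, exist_ok=True)
    created = []
    for i in range(number_of_files):
        size = rng.randint(min_size, max_size)  # both bounds inclusive
        file_path = target / f"file_{i:05d}.bin"  # hypothetical naming
        file_path.write_bytes(os.urandom(size))
        created.append(file_path)
    return created
```

With the parameters of the first PowerShell command, the call would be `create_random_files(500, "C:/MyPath", 5_000_000, 12_000_000)`.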

The generated files are then committed to two different repositories, and from there we can work with them. I created the large files with the command below, which creates 500 files between 5 and 12 MB each.

```powershell
Import-Module .\Create-RandomFiles.ps1
Create-RandomFiles -NumberOfFiles 500 -Path "C:\MyPath" -MinRandomSize 5000000 -MaxRandomSize 12000000
```

The second command creates 10,000 small files between 5 and 300 KB each.

```powershell
Import-Module .\Create-RandomFiles.ps1
Create-RandomFiles -NumberOfFiles 10000 -Path "C:\MyPath" -MinRandomSize 5000 -MaxRandomSize 300000
```

Why is that? Because we know that there is …

Each command produced between 1.5 and 2 GB of data, which was committed to the respective repository.

Test Plan

My test plan is basically to run build pipelines that publish and download Pipeline Artifacts and Build Artifacts.

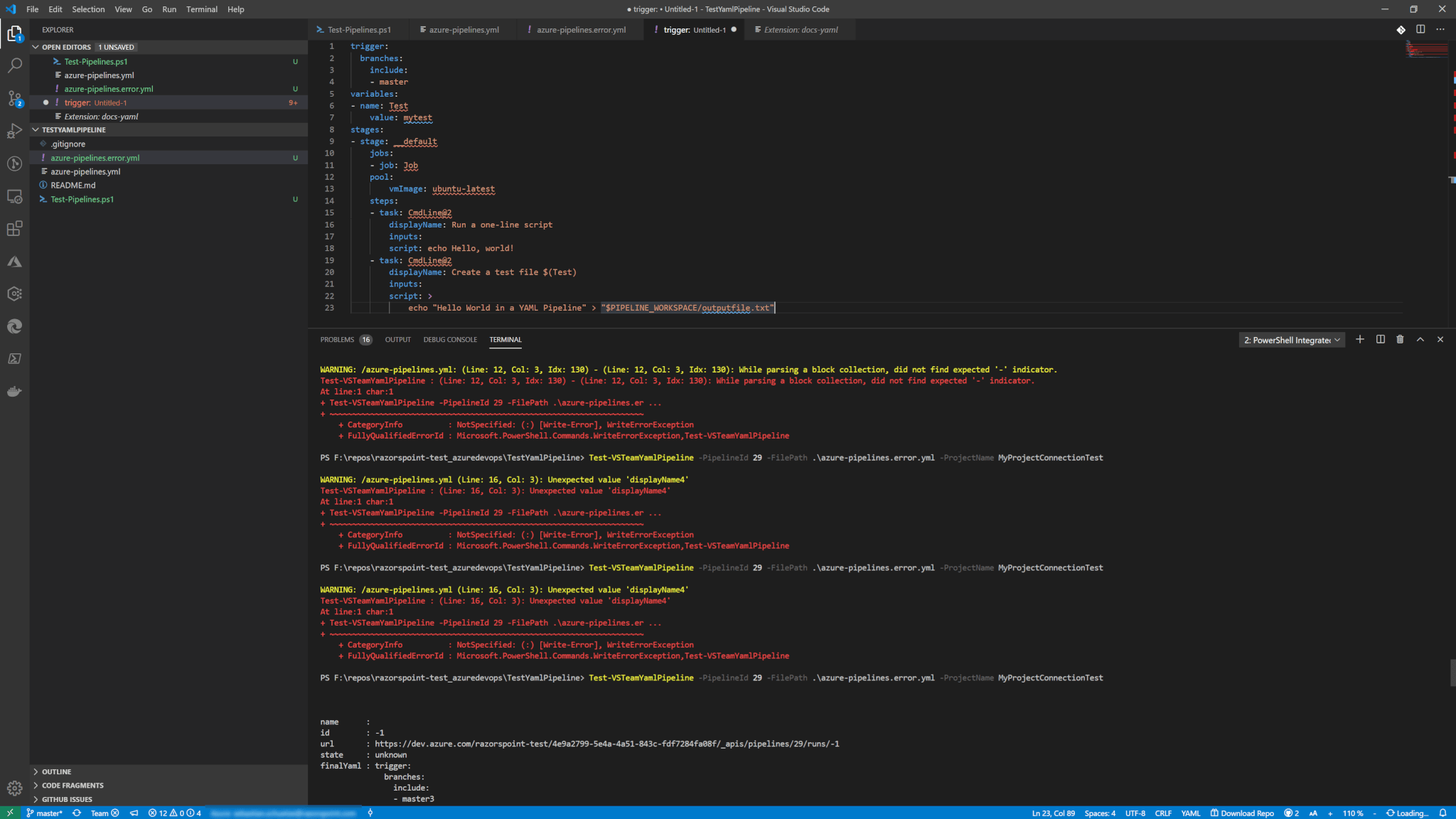

I used four different pipelines: two for the small files, one per approach, and two for the large files, again one per approach. Each pipeline has one phase per agent pool, so every phase runs on a different agent. There are currently six different hosted agent pools plus the Default pool, which contains one private agent that I host in Azure. Below you can see the YAML pipeline template for the Pipeline Artifacts pipelines:

```yaml
jobs:
- job: ${{parameters.jobname}}
  workspace:
    clean: all
  pool: ${{parameters.pool}}
  dependsOn: ${{parameters.dependsOnJobName}}
  steps:
  - task: PublishPipelineArtifact@0
    displayName: 'Publish Pipeline Artifact'
    inputs:
      artifactName: PipelineArtifact-${{parameters.jobname}}-$(Build.BuildNumber)
      targetPath: '$(Build.SourcesDirectory)'
    continueOnError: true

  - task: DownloadPipelineArtifact@0
    displayName: 'Download Pipeline Artifact'
    inputs:
      artifactName: PipelineArtifact-${{parameters.jobname}}-$(Build.BuildNumber)
      targetPath: '$(System.ArtifactsDirectory)'
    continueOnError: true
```

And here is the pipeline that calls the template above:

```yaml
trigger: none

jobs:
- template: pipeline-artifact-tasks.yml
  parameters:
    jobname: Hosted
    dependsOnJobName: ''
    pool:
      vmImage: 'vs2015-win2012r2'

- template: pipeline-artifact-tasks.yml
  parameters:
    jobname: 'Hosted_VS2017'
    dependsOnJobName: 'Hosted'
    pool:
      vmImage: 'vs2017-win2016'

- template: pipeline-artifact-tasks.yml
  parameters:
    jobname: 'Hosted_Windows_2019_with_VS2019'
    dependsOnJobName: 'Hosted_VS2017'
    pool:
      vmImage: 'windows-2019'

- template: pipeline-artifact-tasks.yml
  parameters:
    jobname: 'Hosted_macOS'
    dependsOnJobName: 'Hosted_Windows_2019_with_VS2019'
    pool:
      vmImage: 'macOS-10.13'

- template: pipeline-artifact-tasks.yml
  parameters:
    jobname: 'Hosted_Ubuntu_1604'
    dependsOnJobName: 'Hosted_macOS'
    pool:
      vmImage: 'ubuntu-16.04'

- template: pipeline-artifact-tasks.yml
  parameters:
    jobname: 'Hosted_Windows_Container'
    dependsOnJobName: 'Hosted_Ubuntu_1604'
    pool:
      vmImage: 'win1803'

- template: pipeline-artifact-tasks.yml
  parameters:
    jobname: 'Private'
    dependsOnJobName: 'Hosted_Windows_Container'
    pool:
      name: 'default'
```

You can also find these pipelines in both repositories. If you want to know more about YAML pipelines, check out the introduction (part 1 and part 2) by Damian Brady (Microsoft Cloud Developer Advocate specializing in Azure DevOps), or the official documentation.

To run the test, I used PowerShell and the VSTeam PowerShell module maintained by Donovan Brown. The module wraps the REST API of your Azure DevOps organization and makes working with it more efficient. I used the script below to trigger 100 builds for each build definition.

Each build also has seven phases, which means 400 builds with 2,800 phases in total.

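```powershell
# Requires the VSTeam module and an authenticated session (see the note below)
Import-Module VSTeam

$buildDefinitionIds = (15,16,17,19) # Array of the build definition IDs to queue
$projectName = "#Your Project Name#"

# Queue 100 builds of every build definition
1..100 | ForEach-Object {
    foreach ($buildDefId in $buildDefinitionIds) {
        Add-VSTeamBuild -BuildDefinitionId $buildDefId -ProjectName $projectName
    }
}
```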

Note: You still have to authenticate against your Azure DevOps organization (for example with `Set-VSTeamAccount`) before you can use this script.

Getting and Evaluating Test Results

This left me with the results of 400 builds with seven phases each, where every phase ran one publish and one download task for the artifacts. As a developer, I of course gathered the statistics with a script, again mostly using VSTeam.

Since the script is a bit long, I put it in a Gist:

https://gist.github.com/SebastianSchuetze/83b7320b77e829b0164af776d0b939e6

The script creates a CSV file, which I then evaluated in Excel. You can download the Excel file if you want to see my evaluation of the statistics together with the raw data.
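The exact CSV layout produced by the Gist script is not shown here. Assuming hypothetical columns `Agent`, `Task` and `DurationSeconds`, the per-agent averages described earlier could also be computed outside Excel with a short Python sketch:

```python
from __future__ import annotations

import csv
import io
from collections import defaultdict
from statistics import mean


def average_durations(csv_text: str) -> dict[tuple[str, str], float]:
    """Average task duration per (agent, task) pair.

    Assumes hypothetical columns Agent, Task and DurationSeconds; the CSV
    written by the Gist script may use different names.
    """
    groups: dict[tuple[str, str], list[float]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups[(row["Agent"], row["Task"])].append(float(row["DurationSeconds"]))
    return {key: mean(values) for key, values in groups.items()}


# Dummy values for illustration only, not measurements from the test
sample = """Agent,Task,DurationSeconds
Hosted_macOS,Publish Pipeline Artifact,10
Hosted_macOS,Publish Pipeline Artifact,20
Private,Download Build Artifacts,30
"""
averages = average_durations(sample)
```

Grouping by the (agent, task) pair keeps publish and download timings separate, which matches how the charts compare the two task types per agent.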

There is not much left to say, except: try out Pipeline Artifacts yourself! 🙂