Update 5.5.2020: Sharing variables between stages is now supported natively. See my new blog post for details.

The new multi-stage YAML pipelines give you a lot more flexibility. Variables can be used and shared in nearly every part of a pipeline (e.g. in stages and jobs), but they can't be shared between stages. But hey, there is always a solution.

One great solution is to use the REST API to update the variable you want to reuse. Rob Bos posted this solution.

I also came across another solution, which I'm posting here so it doesn't get lost in case I or somebody else needs it. The VSTeam module, which I help Donovan maintain, recently received a PR that suggested reusing variables between stages. The PR was closed without being merged because we could fix the underlying problem without sharing variables across stages. I liked the solution, though, and didn't want the suggestion to get lost.

YAML Template: Save Variables

This template persists the variable as a file in the pipeline workspace. Save it as persist-variable.yml, the file name the pipeline further down references. I got the script below from:

```yaml
parameters:
  variableName: ''

steps:
# Fail early if the template was called without a variable name
- bash: |
    echo "Variable 'variableName' found with value '$VARIABLE_NAME'"
    if [ -z "$VARIABLE_NAME" ]; then
      echo "##vso[task.logissue type=error;]Missing template parameter \"variableName\""
      echo "##vso[task.complete result=Failed;]"
    fi
  env:
    VARIABLE_NAME: ${{ parameters.variableName }}
  displayName: Check for required parameters in YAML template

# Write the current value of the variable to $(Pipeline.Workspace)/variables/<name>.variable
- bash: |
    mkdir -p $(Pipeline.Workspace)/variables
    echo "$(${{ parameters.variableName }})" > $(Pipeline.Workspace)/variables/${{ parameters.variableName }}.variable
    echo "Variable written to '$(Pipeline.Workspace)/variables/${{ parameters.variableName }}.variable'"
  displayName: 'Persist ''${{ parameters.variableName }}'' variable'
```
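To try the persist logic outside a pipeline, here is a minimal local bash sketch. It is not part of the template: `persist_variable` is a hypothetical helper, and the sketch assumes the workspace path comes from the `PIPELINE_WORKSPACE` environment variable (the environment-variable form of `$(Pipeline.Workspace)` on an agent), falling back to a temporary directory when run locally.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: PIPELINE_WORKSPACE mirrors $(Pipeline.Workspace) on an agent;
# fall back to a temporary directory when run locally
PIPELINE_WORKSPACE="${PIPELINE_WORKSPACE:-$(mktemp -d)}"

# Hypothetical helper mirroring persist-variable.yml:
# writes value $2 of variable $1 to $PIPELINE_WORKSPACE/variables/$1.variable
persist_variable() {
  local name="$1" value="$2"
  if [ -z "$name" ]; then
    echo "##vso[task.logissue type=error;]Missing template parameter \"variableName\""
    return 1
  fi
  mkdir -p "$PIPELINE_WORKSPACE/variables"
  echo "$value" > "$PIPELINE_WORKSPACE/variables/$name.variable"
  echo "Variable written to '$PIPELINE_WORKSPACE/variables/$name.variable'"
}

persist_variable "MyVar" "NewVal"
```

Each variable ends up in its own file named after the variable, so several variables can be persisted into the same `variables` folder and published as one artifact.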

YAML Template: Read Variables

This template reads the variable back from its file in the pipeline workspace and sets it as a pipeline variable again. Save it as read-variable.yml. I got the script below from:

```yaml
parameters:
  variableName: ''

steps:
# Fail early if the template was called without a variable name
- bash: |
    echo "Variable 'variableName' found with value '$VARIABLE_NAME'"
    if [ -z "$VARIABLE_NAME" ]; then
      echo "##vso[task.logissue type=error;]Missing template parameter \"variableName\""
      echo "##vso[task.complete result=Failed;]"
    fi
  env:
    VARIABLE_NAME: ${{ parameters.variableName }}
  displayName: Check for required parameters in YAML template

# Read the value from the downloaded file and set it as a variable for the following steps
- bash: |
    echo "Reading file '$(Pipeline.Workspace)/variables/${{ parameters.variableName }}.variable'"
    cat $(Pipeline.Workspace)/variables/${{ parameters.variableName }}.variable
    READ_VAR=$(cat $(Pipeline.Workspace)/variables/${{ parameters.variableName }}.variable)
    echo "Read value '$READ_VAR'"
    echo "##vso[task.setvariable variable=${{ parameters.variableName }};]$READ_VAR"
  displayName: 'Reading ''${{ parameters.variableName }}'' variable'
```

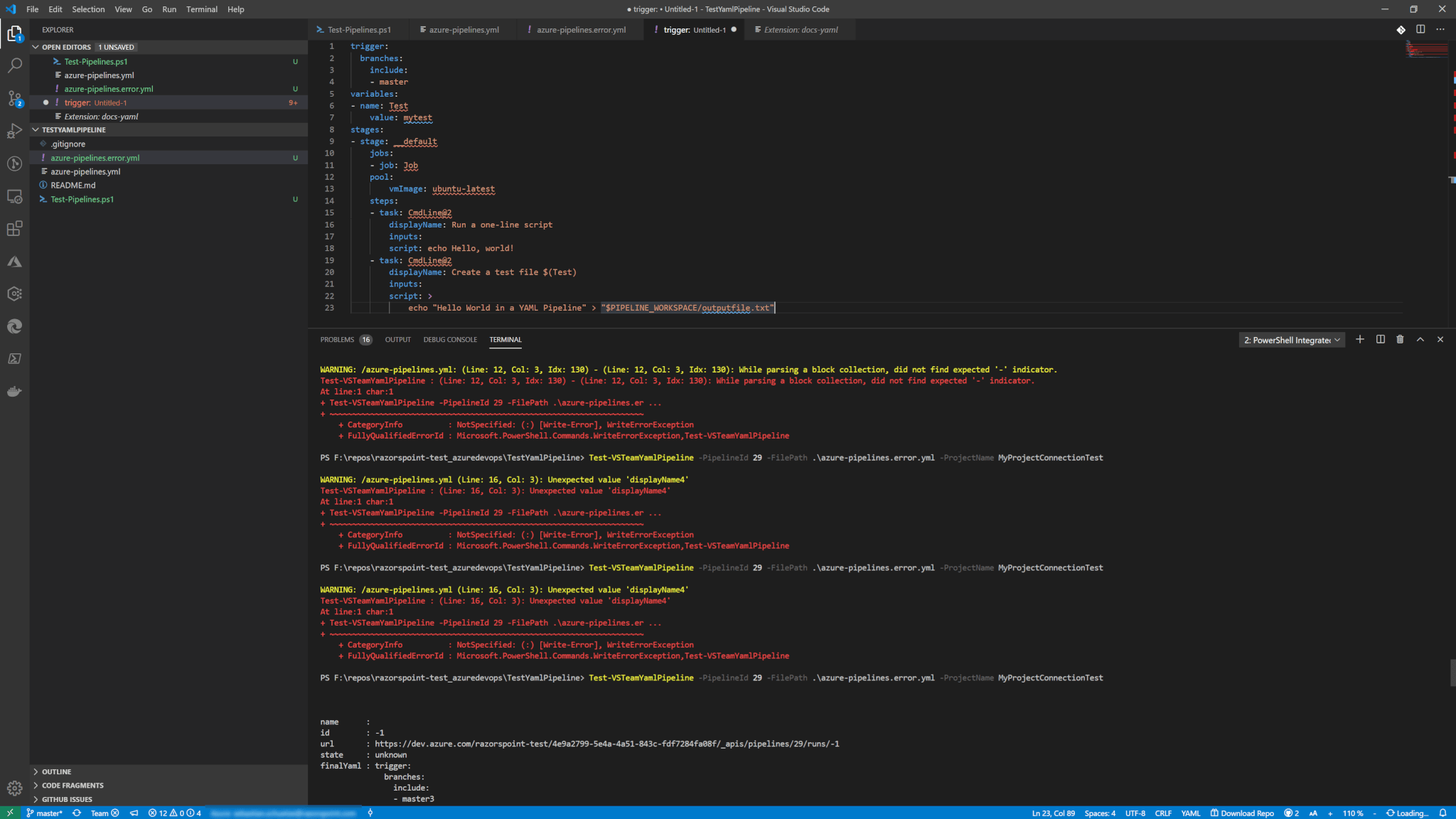
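The read side can be sketched the same way in plain bash. This is only an illustration: `read_variable` is a hypothetical helper, the artifact download is simulated by writing the file directly, and outside an agent the `##vso[task.setvariable ...]` line is simply printed. On an agent, that logging command is what makes the value available to the later steps of the job.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: PIPELINE_WORKSPACE mirrors $(Pipeline.Workspace); use a temp dir when run locally
PIPELINE_WORKSPACE="${PIPELINE_WORKSPACE:-$(mktemp -d)}"

# Simulate the downloaded 'variables' artifact
mkdir -p "$PIPELINE_WORKSPACE/variables"
echo "NewVal" > "$PIPELINE_WORKSPACE/variables/MyVar.variable"

# Hypothetical helper mirroring read-variable.yml:
# prints the logging command that sets variable $1 to the content of its .variable file
read_variable() {
  local name="$1"
  local file="$PIPELINE_WORKSPACE/variables/$name.variable"
  local value
  value="$(cat "$file")" || return 1   # fail if the artifact was not downloaded
  echo "##vso[task.setvariable variable=$name;]$value"
}

read_variable "MyVar"
# prints: ##vso[task.setvariable variable=MyVar;]NewVal
```

Note that a variable set this way is only visible to the steps after the one that emits the logging command, which is why the read template runs before any step that needs the value.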

Use Templates in a Pipeline

The approach: read the current value of the chosen variable, save it to a file, and publish that file as a pipeline artifact. The next stage downloads the artifact and reads the value back. Below is an example master YAML pipeline that shows it in action.

```yaml
trigger:
- master

resources:
- repo: self

pool:
  vmImage: 'ubuntu-16.04'

variables:
  MyVar: 'MyVal'

stages:
- stage: Save_Variable
  jobs:
  - job: Save_Variable
    steps:
    # Change MyVar at runtime so we can see that the new value reaches the next stage
    - pwsh: Write-Host "##vso[task.setvariable variable=MyVar;]NewVal"
    - template: ./persist-variable.yml
      parameters:
        variableName: 'MyVar'
    # Publish the folder with the .variable files as the 'variables' artifact
    - publish: $(Pipeline.Workspace)/variables
      artifact: variables

- stage: Read_Variables
  dependsOn: Save_Variable
  jobs:
  - job: Read_Variable
    steps:
    # Downloads the artifact to $(Pipeline.Workspace)/variables
    - download: current
      artifact: variables
    - template: ./read-variable.yml
      parameters:
        variableName: 'MyVar'
```
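The flow of this pipeline can be mimicked locally in bash to show why the artifact is needed. This is a simplified simulation under stated assumptions, not how the agent works internally: two temporary directories play the workspaces of the two stages (on Microsoft-hosted agents each job gets a fresh virtual machine, so nothing on disk carries over), a third hypothetical directory stands in for the pipeline's artifact storage, and `cp -r` replaces the `publish` and `download` steps.

```shell
#!/usr/bin/env bash
set -euo pipefail

SAVE_WS="$(mktemp -d)"        # workspace of the Save_Variable job
READ_WS="$(mktemp -d)"        # workspace of the Read_Variable job (a different machine)
ARTIFACT_STORE="$(mktemp -d)" # hypothetical stand-in for pipeline artifact storage

# Stage Save_Variable
MyVar="NewVal"                                        # value set at runtime by the pwsh step
mkdir -p "$SAVE_WS/variables"
echo "$MyVar" > "$SAVE_WS/variables/MyVar.variable"   # persist-variable.yml
cp -r "$SAVE_WS/variables" "$ARTIFACT_STORE/"         # publish ... artifact: variables

# Stage Read_Variables: a fresh job only knows the pipeline-level default
MyVar="MyVal"
echo "Before reading: $MyVar"
cp -r "$ARTIFACT_STORE/variables" "$READ_WS/"         # download: current, artifact: variables
MyVar="$(cat "$READ_WS/variables/MyVar.variable")"    # read-variable.yml
echo "MyVar in stage Read_Variables: $MyVar"
```

Without the two `cp -r` lines the `cat` in the second stage fails because the file only exists in the first workspace, which is exactly the gap the published artifact closes.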

Credits

Apart from creating the master YAML file, I took the idea from Tom Kerkhove, who contributed it as a PR to the VSTeam module.

Also published on Medium.